This keynote was delivered today at NWeLearn. The slides can also be found on Speaker Deck.

This is – I think (I hope) – the last keynote I will deliver this year. It’s the 11th that I’ve done. I try to prepare a new talk each time I present, in no small part because it keeps me interested and engaged, pushing my thinking and writing forward, learning. And we’re told frequently as of late that as the robots come to take our jobs, they will take first the work that can be most easily automated. So to paraphrase the science fiction writer Arthur C. Clarke, any keynote speaker that can be replaced by a machine should be.

That this is my last keynote of the year does not mean that I’m on vacation until 2016. If you’re familiar with my website Hack Education, you know that I spend the final month or so of each year reviewing everything that’s happened in the previous 12 months, writing an in-depth analysis of the predominant trends in education technology. I try in my work to balance this recent history with a deeper, longer view: what do we know about education technology today based on education technology this year, this decade, this century – what might that tell us about the shape of things to come.

In my review of “the year in ed-tech,” I look to see where the money’s gone – this has been a record-setting year for venture capital investment in education technology, for what it’s worth (over $3.76 billion). I look to see what entrepreneurs and engineers are building and what educators and students are adopting and what politicians are demanding, sanctioning. I look for, I listen to the stories.

Today I want to talk about three of these trends – “themes” is perhaps the more accurate word: austerity, automation, and algorithms.

I have the phrase “future of education” in the title to this talk, but I don’t want to imply that either austerity or automation or algorithms are pre-ordained. There is no inevitability to the future of education, to any of this – there is no inevitability to what school or technology will look like; there is no inevitability to our disinvestment in public education. These are deeply political issues – issues of labor, information, infrastructure, publicness, power. We can (we must) actively work to mold this future, to engage in public and political dialogue and not simply hand over the future to industry.

Austerity looms over so much of what is happening right now in education. Between 1987 and 2012, the share of revenue that Washington State University received from Washington state, for example, fell from 52.8% to 32.3%. Boise State University saw its state support fall from 64.7% of its revenue to 30.3%. The University of Oregon, from 35.8% to 9.3%.

Schools are now tasked to “do more with less,” which is often code, if you will, for utilizing digital technologies to curb “inefficiencies” and to “scale” services. The application of the language and practices of scientific management to education isn’t new. Not remotely. Raymond Callahan wrote a book called Education and the Cult of Efficiency in 1962, for example, that explored the history and the shape of school administration through this very lens. Measurement. Assessment. “Outcomes.” Data. Standardization. The monitoring and control of labor. And in many ways this has been the history of, the impetus behind education technology as well. Measurement. Assessment. “Outcomes.” Data. Standardization. The monitoring and control of labor. Education technology is, despite many of our hopes for something else, for something truly transformational, often a tool designed to meet administrative goals.

As Seymour Papert – MIT professor and probably one of the most visionary people in education technology – wrote in his 1993 book The Children’s Machine,

Little by little the subversive features of the computer were eroded away: Instead of cutting across and so challenging the very idea of subject boundaries, the computer now defined a new subject; instead of changing the emphasis from impersonal curriculum to excited live exploration by students, the computer was now used to reinforce School’s ways. What had started as a subversive instrument of change was neutralized by the system and converted into an instrument of consolidation.

For Papert, that consolidation involved technology, administration, and (capital-S) School. Under austerity, however, we must ask what happens to “School’s ways.” Do they increasingly become “businesses’ ways,” “markets’ ways”? What else might “the subversive features of the computer” serve to erode – and erode in ways (this is what concerns me) that are far from optimal for learning, for equity, for justice?

I first included “Automation and Artificial Intelligence” as one of the major trends in education technology in 2012. 2012 was, if you recall, deemed “The Year of the MOOC” by The New York Times. I was struck at the time by how the MOOCs that the media were suddenly obsessed with all emerged from elite universities’ artificial intelligence labs. Udacity’s founder Sebastian Thrun, Coursera’s founders Andrew Ng and Daphne Koller were from Stanford University’s Artificial Intelligence Laboratory; Anant Agarwal, the head of edX, from MIT’s Computer Science and Artificial Intelligence Laboratory.

I wondered then, “How might the field of artificial intelligence – ‘the science of creating intelligent machines’ – shape education?” Or rather, how does this particular discipline view “intelligence” and “learning” of machines and how might that be extrapolated to humans?

The field of artificial intelligence relies in part on a notion of “machine learning” – that is, teaching computers to adapt their behaviors algorithmically (in other words, to “learn”) – as well as on “natural language processing” – that is, teaching computers to understand input from humans that isn’t written in code. (Yes, this is a greatly oversimplified explanation. I didn’t last too long in Sebastian Thrun’s AI MOOC.)

Despite the popularity and the hype surrounding MOOCs, Thrun may still be best known for his work on the Google self-driving car. And I’d argue we can look at autonomous vehicles – not only the technology, but the business, the marketing, the politics – and see some connections to how AI might construe education as well. If nothing else, much like the self-driving car with its sensors and cameras and knowledge of the Google-mapped-world, we are gathering immense amounts of data on students via their interactions with hardware, software, websites, and apps. And more data, so the argument goes, equals better modeling.

Fine-tuning these models and “teaching machines” has been the Holy Grail for education technology for a hundred years – that is to say, there has long been a quest to write software that offers “personalized feedback,” that responds to each individual student’s skills and needs. That’s the promise of today’s “adaptive learning,” the promise of both automation and algorithms.

What makes ed-tech programming “adaptive” is that the AI assesses a student’s answer (typically to a multiple choice question), then follows up with the “next best” question, aimed at the “right” level of difficulty. This doesn’t have to require a particularly complicated algorithm, and the idea is actually based on “item response theory,” which dates back to the 1950s and the rise of the psychometrician. Despite the intervening decades, quite honestly, these systems haven’t become terribly sophisticated, in no small part because they tend to rely on multiple choice tests.
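To show just how uncomplicated the “next best question” loop can be, here is a minimal sketch. It assumes the simplest item response model (the one-parameter Rasch model) and a crude update rule; the item bank, the function names, and the step size are all hypothetical, and this is not a description of how any particular vendor’s engine works.

```python
import math

# Hypothetical item bank: question id -> difficulty (same scale as ability).
ITEMS = {"q_easy": -1.0, "q_medium": 0.0, "q_hard": 1.2}


def p_correct(ability, difficulty):
    """Rasch model: probability that a student answers an item correctly."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def update_ability(ability, difficulty, correct, step=0.5):
    """Nudge the ability estimate up after a right answer, down after a wrong one.

    The size of the nudge is the gap between what happened (1 or 0)
    and what the model expected. The step size is an arbitrary assumption.
    """
    observed = 1.0 if correct else 0.0
    return ability + step * (observed - p_correct(ability, difficulty))


def next_item(ability, items, asked):
    """Pick the unasked item whose difficulty is closest to the ability estimate.

    Under the Rasch model this is the item the student has the closest to a
    50/50 chance on, which is the "right" level of difficulty in this sketch.
    """
    remaining = [name for name in items if name not in asked]
    if not remaining:
        return None
    return min(remaining, key=lambda name: abs(items[name] - ability))


if __name__ == "__main__":
    ability, asked = 0.0, set()
    first = next_item(ability, ITEMS, asked)
    asked.add(first)
    ability = update_ability(ability, ITEMS[first], correct=True)
    print(first, "->", next_item(ability, ITEMS, asked))
```

Everything here hinges on a right-or-wrong signal from a multiple choice item, which is exactly the limitation described above.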

And yet the marketing surrounding these adaptive learning systems is full of wildly exaggerated claims about their potential and their capabilities. The worst offender: Knewton.

In August, Knewton announced that it was making its “adaptive learning” engine available to anyone (not just to the textbook companies to whom it had previously licensed its technology). Educators can now upload their own materials to Knewton – videos, assignments, quizzes, and so on – and Knewton says it will assess how well each piece of content works for each student.

“Think of it as a friendly robot-tutor in the sky,” Jose Ferreira, Knewton founder and CEO, said in the company’s press release. “Knewton plucks the perfect bits of content for you from the cloud and assembles them according to the ideal learning strategy for you, as determined by the combined data-power of millions of other students.” “We think of it like a robot tutor in the sky that can semi-read your mind and figure out what your strengths and weaknesses are, down to the percentile,” Ferreira told NPR.

A couple of years ago, he was giving similar interviews: “What if you could learn everything,” was the headline in Newsweek.

Based on the student’s answers [on Knewton’s platform], and what she did before getting the answer, “we can tell you to the percentile, for each concept: how fast they learned it, how well they know it, how long they’ll retain it, and how likely they are to learn other similar concepts that well,” says Ferreira. “I can tell you that to a degree that most people don’t think is possible. It sounds like space talk.” By watching as a student interacts with it, the platform extrapolates, for example, “If you learn concept No. 513 best in the morning between 8:20 and 9:35 with 80 percent text and 20 percent rich media and no more than 32 minutes at a time, well, then the odds are you’re going to learn every one of 12 highly correlated concepts best that same way.”

“We literally know everything about what you know and how you learn best, everything,” Ferreira says in a video posted on the Department of Education website. “We have five orders of magnitude more data about you than Google has. …We literally have more data about our students than any company has about anybody else about anything, and it’s not even close.”

The way that Knewton describes it, this technology is an incredible, first-of-its-kind breakthrough in “personalization” – that is, the individualization of instruction and assessment, mediated through technology in this case. “Personalization,” as it’s often framed, is meant to counter the “one-size-fits-all” education that, stereotypically at least, the traditional classroom provides.

And if the phrase “semi-read your mind” didn’t set off your bullshit detector, I’ll add this: there’s a dearth of evidence – nothing published in peer-reviewed research journals – that Knewton actually works. (We should probably interrogate what it means when companies or researchers say that any ed-tech “works.” What does that verb mean?) Knewton surely isn’t, as Ferreira described it in an interview with Wired, “a magic pill.”

Yet that seems to be beside the point. The company, which has raised some $105 million in venture capital and has partnered with many of the world’s leading textbook publishers, has tapped into a couple of popular beliefs: 1) that instructional software can “adapt” to each individual student and 2) that that’s a desirable thing.

The efforts to create, as Ferreira puts it, a “robot-tutor” are actually quite long-running. Indeed the history of education technology throughout the twentieth and now twenty-first centuries could be told by looking at these endeavors. Even the earliest teaching machines – those developed before the advent of the computer – strove to function much like today’s “adaptive technologies.” These devices would allow students, their inventors argued, to “learn at their own pace,” a cornerstone of “personalization” via technology.

It’s easy perhaps to scoff at the crudity of these machines, particularly when compared to the hype and flash from today’s ed-tech industry. Ohio State University professor Sidney Pressey built the prototype for his device “the Automatic Teacher” out of typewriter parts, debuting it at the 1924 American Psychological Association meeting. A little window displayed a multiple choice question, and the student could press one of four keys to select the correct answer. The machine could be used to test a student – that is, to calculate how many right answers were chosen overall; or it could be used to “teach” – the next question would not be revealed until the student got the first one right, and a counter would keep track of how many tries it took.
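The two modes of Pressey’s machine are simple enough to fit in a few lines, which is rather the point. What follows is a rough sketch based only on the description above; the data layout and function names are hypothetical, and nothing here is drawn from Pressey’s actual design.

```python
def run_test_mode(questions, answers):
    """Test mode: one key press per question; return the number answered correctly."""
    return sum(
        1 for question, key in zip(questions, answers)
        if key == question["correct_key"]
    )


def run_teach_mode(questions, keypresses):
    """Teach mode: the next question stays hidden until the right key is pressed.

    Returns the number of tries spent on each question, like the machine's counter.
    If the key presses run out before the right key is pressed, the count for that
    question is however many presses were made.
    """
    presses = iter(keypresses)  # shared across questions, consumed in order
    tries = []
    for question in questions:
        count = 0
        for key in presses:
            count += 1
            if key == question["correct_key"]:
                break  # right answer: reveal the next question
        tries.append(count)
    return tries


if __name__ == "__main__":
    # Hypothetical two-question quiz; keys are numbered 1 through 4.
    quiz = [{"correct_key": 2}, {"correct_key": 4}]
    print(run_test_mode(quiz, [2, 1]))         # one attempt per question
    print(run_teach_mode(quiz, [1, 3, 2, 4]))  # keep pressing until correct
```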

Ed-tech might look, on the surface, to be fancier today, but these are still the mechanics that underlie so much of it.

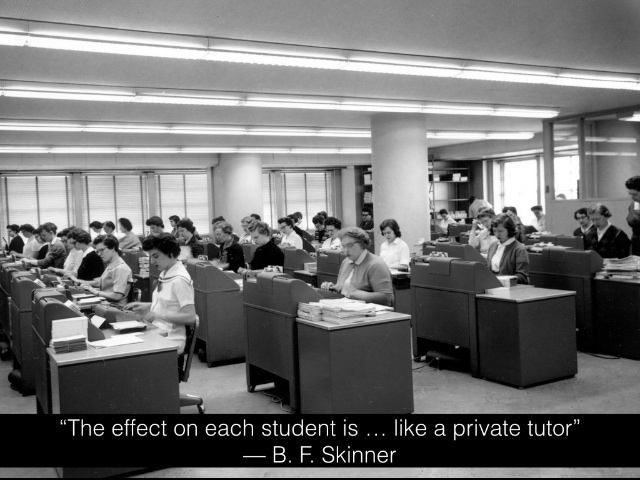

It’s Harvard psychology professor and radical behaviorist B. F. Skinner who is often credited as the inventor of the first teaching machine, even though Pressey’s work predated Skinner’s by several decades. Skinner insisted that his machines were different: while Pressey’s tested students on material they’d already been exposed to, Skinner claimed his teaching machine facilitated teaching, introducing students to new concepts in incremental steps.

This incrementalism was important for Skinner because he believed that the machines could be used to minimize the number of errors that students made along the way, maximizing the positive reinforcement that students received. (This was a key feature of his behaviorist model of learning.) Educational materials should be broken down into small chunks and organized in a logical fashion for students to move through. Skinner called this process “programmed instruction.”

In some ways, this is akin to what Knewton claims to do today: taking the content associated with a particular course or textbook and breaking it down into small “learning objects.” Again, to quote Ferreira in the company’s latest press release, “Knewton plucks the perfect bits of content for you from the cloud and assembles them according to the ideal learning strategy for you, as determined by the combined data-power of millions of other students.”

That assembly of content based on “data-power” is distinct from what either Pressey’s or Skinner’s machines could do. That capability, of course, is a result of the advent of the computer. Students using these early teaching machines still had to work through the type and the order of questions as these machines presented them; they could only control the speed with which they progressed (although curriculum, as designed to be delivered by teacher or machine, typically does progress from simple to difficult). Nevertheless the underlying objective – enhanced by data and algorithms or not – has remained the same for the last century: create a machine that works like a tutor. As Skinner wrote in 1958,

The machine itself, of course, does not teach. It simply brings the student into contact with the person who composed the material it presents. It is a laborsaving device because it can bring one programmer into contact with an indefinite number of students. This may suggest mass production, but the effect upon each student is surprisingly like that of a private tutor.

A private tutor.

It’s hardly a surprise that echoing this relationship has been a goal that education technology has strived for. A private tutor is often imagined to be the best possible form of instruction, superior to “whole class instruction” associated with the public school system. And it’s what royalty and the rich have provided their children historically; parents can still spend a great deal of money in providing a private tutor for their child.

A tutor is purportedly the pinnacle of “individualized instruction.” Some common assumptions: A tutor pays attention – full attention – to only one student. The lessons are crafted and scaffolded specifically for that student, and a tutor does not move on to the next lesson until the student masters the concept at hand. A tutor offers immediate feedback, stepping in to correct errors in reasoning or knowledge. A tutor keeps the student on task and sufficiently motivated, through a blend of subject-matter expertise, teaching skills, encouragement, compassion, and rigor. But a student can also take control of the exchange, asking questions or changing the topic. Working with a tutor, a student is a more actively engaged learner – indeed, a tutor provides an opportunity for a student to construct their own knowledge, not simply receive instruction.

Now some of these strengths of tutors may be supposition or stereotype. Nonetheless, the case for tutoring was greatly reinforced by education psychologist Benjamin Bloom who, in 1984, published his article “The Two Sigma Problem” that found that “the average student under tutoring was about 2 standard deviations above the average of the control class,” a conventional classroom with one teacher and 30 students. Tutoring is, Bloom argued, “the best learning conditions we can devise.”

But here’s the challenge that Bloom identified: one-to-one tutoring is “too costly for most societies to bear on a large scale.” It might work for the elite, but one tutor for every student simply won’t work for public education.

Enter the computer – and a rekindling of interest in building “robot tutors.”

While the “robot tutor” can trace its lineage through the early teaching machines of Pressey and Skinner, it owes much to the work of early researchers in artificial intelligence as well, and as such, the successes and failures of “intelligent tutoring systems” can be mapped to a field that, at its outset in the early 1950s, was quite certain it was on the verge of creating a breakthrough in “thinking machines.” And once we could build a machine that thinks, surely we could build machines to teach humans how to think and how to learn. “Within a generation,” MIT AI professor Marvin Minsky famously predicted in 1967, “the problem of creating ‘artificial intelligence’ will substantially be solved.”

The previous year, Stanford education professor Patrick Suppes made a similar pronouncement in the pages of Scientific American:

One can predict that in a few more years millions of school children will have access to what Philip of Macedon’s son Alexander enjoyed as a royal prerogative: the personal services of a tutor as well-informed and responsive as Aristotle.

Of course, we have yet to solve the problems of artificial intelligence, let alone the problem of creating “intelligent tutoring systems,” despite decades of research.

These systems – “robot tutors” – have to be able to perform a number of tasks, far more complex than those that early twentieth century teaching machines could accomplish. To match the ideal human tutor, robot tutors have to be programmed with enough material to be subject matter experts (or at least experts in the course materials). That’s not new or unique – pre-digital teaching machines did that too. But they also should be able to respond with more nuance than just “right” or “wrong.” They must be able to account for what students’ misconceptions mean – why does the student choose the wrong answer? Robot tutors need to assess how the student works to solve a problem, not simply assess whether they have the correct answer. They need to provide feedback along the way. They have to be able to customize educational materials for different student populations – that means they have to have a model for understanding what “different student populations” might look like and how their knowledge and their learning might differ from others. Robot tutors have to not just understand the subject at hand but they have to understand how to teach it too; they have to have a model for “good pedagogy” and be able to adjust that to suit individual students’ preferences and aptitudes. If a student asked a question, a robot would have to understand that and provide an appropriate response. All this (and more) has to be packaged in a user interface that is comprehensible and that doesn’t itself function as a roadblock to a student’s progress through the lesson.

These are all incredibly knotty problems.

In the 1960s, many researchers began to take advantage of mainframe computers and time-sharing to develop computer assisted instructional (CAI) systems. Much like earlier, pre-digital teaching machines, these were often fairly crude – as Mark Urban-Lurain writes in his history of intelligent tutoring systems, “Essentially, these were automated flash card systems, designed to present the student with a problem, receive and record the student’s response, and tabulate the student’s overall performance on the task.” As the research developed, these systems began to track a student’s previous responses and present them with materials – different topics, different complexity – based on that history.

Early examples of CAI include the work of Patrick Suppes and Richard Atkinson at their lab at Stanford, the Institute for Mathematical Studies in the Social Sciences. They developed a system that taught reading and arithmetic to elementary students in Palo Alto as well as in rural Kentucky and Mississippi. In 1967, the two founded the Computer Curriculum Corporation, which sold CAI systems – mainframes, terminals, and curriculum – to schools.

(The company was acquired in 1990 by Simon & Schuster, which a few years later sold the CAI software to Pearson.)

Meanwhile, at the University of Illinois Urbana-Champaign, another CAI system was under development, in no small part due to the work by then graduate student Don Bitzer on the university’s ILLIAC–1 mainframe. He called it Programmed Logic for Automatic Teaching Operations or PLATO. Bitzer recognized that the user interface would be key for any sort of educational endeavor, and as such he thought that the terminals that students used should offer more than text-based Teletype, which at the time was the common computer interface. Bitzer helped develop a screen that could display both text and graphics. And more than just display, the screen enabled touch. PLATO boasted another feature too: the programming language TUTOR which (ostensibly at least) allowed anyone to build lessons for the system.

(Much like CCC, PLATO was eventually spun out of the university lab into a commercial product, sold by the Control Data Corporation. CDC sold the trademark “PLATO” in 1989 to The Roach Organization which changed its name to PLATO Learning. Another online network based on PLATO called NovaNet was developed by the UIUC, later purchased by National Computer Systems which in turn was acquired by Pearson. NovaNet was only recently sunsetted: on August 31 of this year. Pearson is also, of course, an investor in Knewton.)

Work on computer-assisted instruction continued in the 1970s and 1980s – the term “intelligent tutoring system” (ITS) was coined in 1982 by D. Sleeman and J.S. Brown. Research focused on modeling student knowledge, including their misconceptions or “bugs” that led to their making errors. In general, artificial intelligence had also made strides in natural language processing that boosted computers’ capabilities to process students’ input and queries – beyond their choosing one answer in a multiple choice question. The emerging field of cognitive science began to shape research in education technology as well. Intelligent tutoring systems have remained one of the key areas in the overlap between AI and ed-tech research.

Nevertheless many of the early claims about the coming of “robot tutors” were also challenged early, in no small part because buying these systems proved to be even more expensive for schools and districts than hiring human teachers. And as MIT researcher Ronni Rosenberg wrote in a 1987 review of the “robot tutor” literature,

My readings convinced me that ITS work is characterized by two major methodological flaws. First, ITSs are not well grounded in a model of learning; they seem more motivated by available technology than by educational needs. Many of the systems sidestep altogether the critical problem of modeling the tutoring process and the ones that do try to shed light on cognitive theories of learning do not provide convincing evidence for their theories. What almost all the systems do model, implicitly, is a single learning scheme that is hierarchical, top-down, goal-driven, and sequential. Most of the researchers appear to take for granted that this is the best (if not the only) style of learning, a point that is disputed by educational and social science researchers.

Second, positive claims for ITSs are based on testing that typically is poorly controlled, incompletely reported, inconclusive, and in some cases totally lacking.

So in the almost thirty years since Rosenberg’s article, has there been a major breakthrough in “robot tutors”? Not really. Rather there’s been a steady progress of work on the topic, driven in part by a belief in the superiority of individualized instruction as exemplified by a human tutor. And there’s a ton of marketing hype. We seem to love the story of a “robot tutor.”

As I joked at the beginning of this keynote, the discussions and development of automation are fundamentally labor issues: the robots, we’re told, are coming for our jobs.

Now everyone from B. F. Skinner to Knewton’s CEO Jose Ferreira likes to insist that they don’t intend for their machines to replace teachers. (Sometimes I think they doth protest too much.) Nevertheless, the notion of a “robot tutor” – as a replacement for a human tutor or as an augmentation to a human teacher – still raises important questions about how exactly we view the labor of teaching and learning. As I argued in a talk earlier this year at the University of Wisconsin, Madison, there are things that computers cannot do – things at the very core of the project of education, things at the very core of our work: computers cannot, computers do not care. It may be less that our machines are becoming more intelligent; it may be that we humans are becoming more like machines.

I was recently contacted by a writer for Slate magazine who was working on a story on adaptive technologies. We all know the plot by now: these stories stress the fact that this software is “reshaping the entire educational experience in some settings.” (That last phrase – “in some settings” – is key.) That teachers no longer “teach”; they tutor. They walk around the class and help when an individual student gets stuck. Students can move at their own pace. “This strikes me as a promising approach in a setting like a developmental math course at a community college,” the journalist wrote to me. And I asked him why the most vulnerable students should get the robot – a robot, remember, that does not care.

A 2011 article by Arizona State University’s Kurt VanLehn reviewed the literature on human and intelligent tutoring systems and found that the effect size of the latter was .76, far below Bloom’s famous “two sigma” claim. But VanLehn found that, broadening his analysis beyond the limited number of studies Bloom included, human tutoring also failed to attain that storied 2.0 effect size. The effect of human tutoring, according to VanLehn’s literature review, was only .79. In other words, “robot tutors” might be almost as effective as humans – at least, as measured by the ways in which researchers have established their experiments to gauge “effectiveness.” (There are numerous caveats about VanLehn’s findings including the content areas, the problem sets, the student populations excluded, and the different types of ITS systems included.)
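For those of us who aren’t statistics people, it may help to translate these effect sizes into percentiles. The sketch below assumes that test scores in both groups are normally distributed with the same spread, which is an assumption of mine for illustration and not something either Bloom or VanLehn reports; the function name is hypothetical too. Under that assumption, an effect size of d means the average tutored student scores as well as the student at the percentile given by the normal curve at d.

```python
from statistics import NormalDist


def percentile_of_average_tutored_student(effect_size):
    """Percentile, within the control class, of the average tutored student.

    Assumes normally distributed scores with equal spread in both groups.
    An effect size of 0 maps to the 50th percentile (no difference).
    """
    return NormalDist().cdf(effect_size) * 100


if __name__ == "__main__":
    claims = [
        ("Bloom's two sigma", 2.0),
        ("human tutoring (VanLehn)", 0.79),
        ("intelligent tutoring systems (VanLehn)", 0.76),
    ]
    for label, d in claims:
        pct = percentile_of_average_tutored_student(d)
        print(f"{label}: about the {round(pct)}th percentile")
```

Run as written, this puts Bloom’s claim at roughly the 98th percentile, and VanLehn’s figures for human and machine tutoring at roughly the 79th and 78th. By this arithmetic, the gap between “two sigma” and what VanLehn found is far larger than the gap between human and robot.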

Overall the research on “robot tutors” is pretty mixed. As MIT education researcher Justin Reich has pointed out, “Some rigorous studies show no effects of adaptive systems as compared to traditional instruction, and others show small to moderate effects. In the aggregate, most education policy experts don't consider it to be a reliably effective approach to improve mathematics learning.”

Robot tutors are not, as Knewton’s CEO has boasted, “magic pills.”

So as Reich says, we should be cautious about what exactly these adaptive systems can really do and if it’s the type of thing we really value. Reich writes that,

Much of what we care most about in the mathematics of the future – the ability to find problems in complex scenarios, the ability to create appropriate models of those problems, and the ability to articulate and defend the rationale for particular solutions – is nearly impossible to test using computer graded systems. Most of what computer assisted instructional systems can evaluate are student computational skills, which are exactly the kinds of things that computers are much better at doing than human beings.

“Robot tutors,” it is worth noting, are not the only path that computers in education can follow. As Seymour Papert wrote in his 1980 book Mindstorms, computers have mostly been used to replicate existing educational practices:

In most contemporary educational situations where children come into contact with computers the computer is used to put children through their paces, to provide exercises of an appropriate level of difficulty, to provide feedback, and to dispense information. The computer programming the child.

Papert envisioned instead “the child programming the computer,” and he believed that computers could be a powerful tool for students to construct their own knowledge. That’s “personalization” that a skilled human tutor could help facilitate – fostering student inquiry and student agency. But “robot tutors,” despite the venture capital and the headlines, can’t yet get beyond their script.

And that’s a problem with algorithms as well.

Algorithms increasingly drive our world: what we see on Facebook or Twitter, what Amazon or Netflix suggest we buy or watch, the search results that Google returns, our credit scores, whether we’re selected by TSA for additional security, and so on. Algorithms drive educational software too – this is the boast of a company like Knewton. But it’s the claim of other companies as well: how TurnItIn can identify plagiarism, how Civitas Learning can identify potential drop-outs, how Degree Compass can recommend college classes, and so on.

Now I confess, I’m a literature person. I’m a cultural studies person. I’m not a statistics person. I’m not a math person. Some days I play the role of a technology person, but only on the Internet. So although I want to push for more “algorithmic transparency,” a counter-balance to what law professor Frank Pasquale has called “the black box society,” it’s not like showing me the code is really going to do much good. But we can nevertheless, I think, still look at the human inputs – the culture, the values, the goals of the engineers and entrepreneurs – and ask questions about the algorithms and the paths they want to push students down.

(I saw a headline from MIT this morning – “Why Self-Driving Cars Must Be Programmed to Kill” – for an article calling for “algorithmic morality.” I reckon that is something for the humanities scholars and the social scientists among us to consider; not simply the computer scientists at MIT.)

We can surmise what feeds the algorithms that drive educational technologies. It is, as I noted earlier, often administrative goals. This might include: improving graduation rates, improving attendance, identifying classes that students will easily pass. (That is not to say these might not be students’ goals as well; it’s just that they are distinct, I’d contend, from learning goals.) The algorithms that drive ed-tech often serve the platform or the software’s goals too: more clicking, which means more “engagement,” which does not necessarily mean more learning, but it can make for a nice graph for you to show your investors.

Algorithms circumscribe, all while giving us the appearance of choice, the appearance of personalization: Netflix thinks you’ll like the new Daredevil series, for example, based on the fact you’ve watched Thor half a dozen times. But it won’t, it can’t suggest you pick up and read Marvel’s Black Panther. And it won’t suggest you watch the DC animated comics series, either, because it no longer has a license to stream them. What’s the analogy of this to education? What are algorithms going to suggest? What does that recommendation engine look like?

And who gets this “algorithmic education”? Who experiences automated education? Who will have robot tutors? Who will have caring and supportive humans as teachers and mentors?

Serendipity and curiosity are such important elements in learning. Why would we engineer those out of our systems and schools? (And which systems and which schools?)

Austerity. I think that can explain (partially) why.

Many of us in education technology talk about this being a moment of great abundance – information abundance – thanks to digital technologies. But I think we are actually / also at a moment of great austerity. And when we talk about the future of education, we should question if we are serving a world of abundance or if we are serving a world of austerity. I believe that automation and algorithms, these utterly fundamental features of much of ed-tech, do serve austerity. And it isn’t simply that “robot tutors” (or robot keynote speakers) are coming to take our jobs; it’s that they could limit the possibilities for, the necessities of care and curiosity.

That’s not a future I want for anyone.